Federated multi scale vision transformer with adaptive client aggregation for industrial defect detection

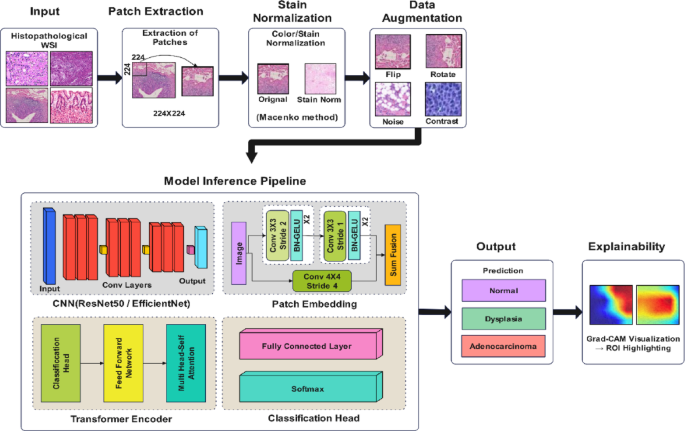

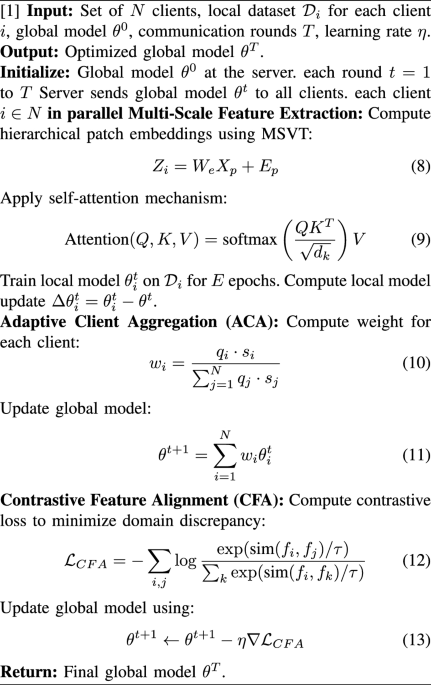

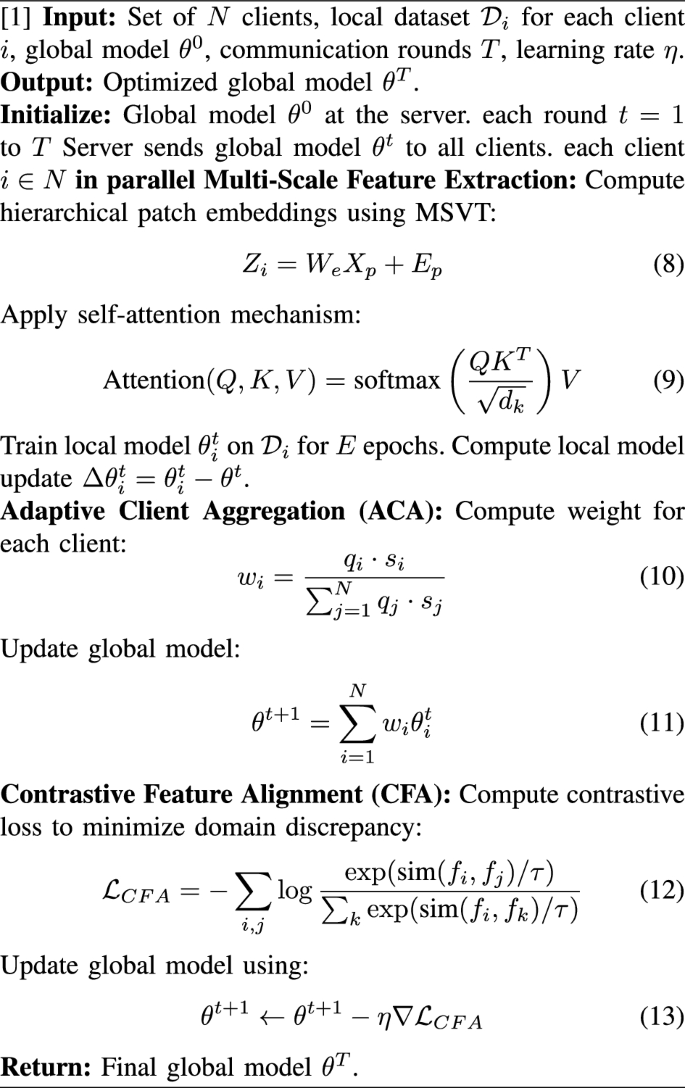

The proposed Federated Multi-Scale Vision Transformer with Adaptive Client Aggregation (Fed-MSVT) is a novel federated learning-based defect detection framework for industrial applications. It integrates multi-scale Vision Transformers (MSVTs), adaptive client aggregation (ACA) and contrastive feature alignment (CFA) to achieve accurate, privacy-preserving, and scalable defect detection.

Multi-scale vision transformer (MSVT)

Vision transformers for defect detection

Vision Transformers (ViTs) excel in modeling long-range dependencies but struggle with local defect detection. To address this, the MSVT module captures hierarchical defect representations by operating at multiple spatial resolutions.

Mathematical formulation

Let \(X \in \mathbb {R}^{H \times W \times C}\) represent the input image, where \(H\) and \(W\) are the height and width, and \(C\) is the number of channels. The image is divided into non-overlapping patches of size \(P \times P\), yielding patch embeddings:

$$\begin{aligned} Z = W_e X_p + E_p \end{aligned}$$

(1)

where \(X_p\) is the flattened image patch, \(W_e\) is the learnable embedding matrix, and \(E_p\) is the positional encoding.

The self-attention mechanism is given by:

$$\begin{aligned} \text {Attention}(Q, K, V) = \text {softmax} \left( \frac{QK^T}{\sqrt{d_k}}\right) V \end{aligned}$$

(2)

where \(Q, K, V\) are the query, key, and value matrices, and \(d_k\) is the dimension of the keys.

To capture multi-scale features, we introduce hierarchical pooling, progressively downsampling features while preserving critical defect details.

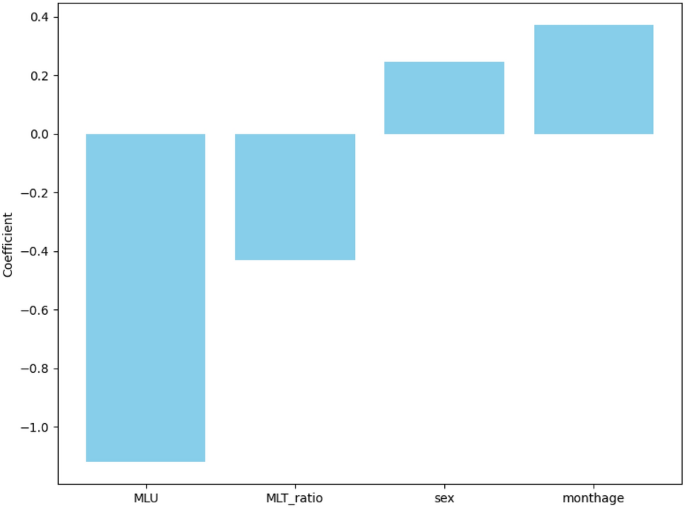

Need for adaptive aggregation

Standard federated learning models such as FedAvg aggregate client updates using simple averaging, which is often suboptimal in the presence of non-IID data distributions and heterogeneous data quality, especially in industrial anomaly detection scenarios. To overcome this limitation, we propose an Adaptive Client Aggregation strategy that assigns dynamic weights to each client based on three key factors:

-

Data quality (\(q_i\)): Derived from each client’s local validation accuracy.

-

Update stability (\(s_i\)): Measured as the inverse variance of model parameter updates.

-

Domain shift similarity (\(d_i\)): (Optional) Measures the alignment of local data distribution with the global feature distribution.

The aggregation weight for each client is computed as:

$$\begin{aligned} w_i = \frac{q_i \cdot s_i}{\sum _{j=1}^{N} q_j \cdot s_j} \end{aligned}$$

(3)

The data quality score \(q_i\) is calculated as the pixel-wise validation accuracy of the local model evaluated on a small held-out validation set (typically 10-15%) from the client’s local MVTec-AD data. The validation accuracy is defined as:

$$\begin{aligned} q_i = \text {ValAcc}_i = \frac{TP + TN}{TP + TN + FP + FN} \end{aligned}$$

(4)

where \(TP\), \(TN\), \(FP\), and \(FN\) represent the number of true positive, true negative, false positive, and false negative pixels respectively, compared against the ground truth anomaly segmentation masks provided by MVTec-AD.

The update stability score \(s_i\) reflects the smoothness of training and is computed as the inverse of the parameter update variance across consecutive rounds:

$$\begin{aligned} s_i = \frac{1}{\text {Var}(\theta _i^t – \theta _i^{t-1})} \end{aligned}$$

(5)

Finally, the global model is updated using the weighted aggregation of local parameters:

$$\begin{aligned} \theta ^t = \sum _{i=1}^{N} w_i \theta _i^t \end{aligned}$$

(6)

This adaptive scheme prioritizes clients with reliable data and stable learning dynamics, thus enhancing robustness and generalization in federated anomaly detection.

Contrastive feature alignment (CFA)

Addressing domain shift

Industrial datasets exhibit domain shifts due to variations in defect patterns and imaging conditions. To mitigate this, we introduce contrastive learning-based feature alignment.

Contrastive feature alignment and loss formulation

To reduce inter-client domain shifts and enhance anomaly separability in the feature space, we introduce a Contrastive Feature Alignment (CFA) module as an auxiliary training objective in Fed-MSVT framework. The goal of CFA is to promote the alignment of features from similar (normal) samples and enforce separation from anomalous ones across different clients, thereby improving generalization in the global model.

Given two feature embeddings \(f_i\) and \(f_j\) from normal samples of different clients (positive pairs), and embeddings \(f_k\) from anomalous samples (negative pairs), the contrastive loss is formulated using the InfoNCE objective:

$$\begin{aligned} \mathcal {L}_{CFA} = – \sum _{i, j} \log \frac{\exp (\text {sim}(f_i, f_j) / \tau )}{\sum _{k} \exp (\text {sim}(f_i, f_k) / \tau )} \end{aligned}$$

(7)

where \(\text {sim}(f_i, f_j)\) denotes cosine similarity between embeddings and \(\tau\) is a temperature scaling parameter. The CFA module is only active during training and helps cluster normal samples more tightly while pushing anomalous representations apart in the shared embedding space.

Contribution to Anomaly Detection While traditional pixel-wise loss functions optimize spatial accuracy, they do not explicitly enforce semantic separation in latent space. The inclusion of CFA addresses this by regularizing the feature space such that:

-

Features of normal samples from different clients are pulled closer, enhancing consistency and generalization.

-

Anomalous features are pushed further away, improving separability and localization of subtle defects.

An ablation study provided in Table 2 demonstrates that removing CFA results in a performance drop (e.g., AUROC decreases by 1.3%), confirming its effectiveness.

The Contributions of Fed-MSVT: The proposed Fed-MSVT framework integrates the following innovations

-

Learns both fine-grained and global defect representations using multi-scale vision transformers, outperforming conventional CNN and single-scale ViT baselines.

-

Employs adaptive client weighting based on data quality and stability, improving federated aggregation under non-IID conditions.

-

Incorporates contrastive feature alignment to mitigate inter-client domain shifts and enhance latent feature discriminability.

These contributions collectively improve accuracy, privacy, robustness, and scalability in industrial anomaly detection across diverse environments.

Federated Multi-Scale Vision Transformer with Adaptive Client Aggregation (Fed-MSVT)

The proposed Fed-MSVT framework significantly advances industrial defect detection by integrating multi-scale vision transformers, adaptive federated learning, and contrastive domain adaptation. Experimental results demonstrate superior accuracy, robustness, and scalability, making it a promising solution for real-time smart manufacturing.

link