A deep learning approach to optimize remaining useful life prediction for Li-ion batteries

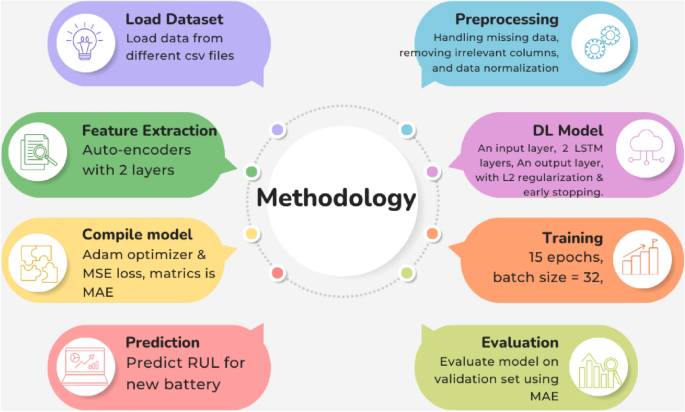

This section presents the details of the proposed predictive model, AccuCell Prodigy. The AccuCell Prodigy model includes auto-encoders and LSTM layers, to significantly enhance the accuracy and efficiency of RUL prediction for Lim-ion batteries. The name “Prodigy” embodies the model’s exceptional predictive capabilities, while “AccuCell” reflects its precision in estimating battery life. The first step in our research involved data preparation. We obtained a dataset consisting of features related to the operational conditions and performance of lithium-ion batteries. This dataset was divided into two parts: features (X) and proxy RUL labels (y). To evaluate the performance of our model, we split the data into training and testing sets using an 80-20 split ratio. This allowed us to train the model on a subset of the data and assess its performance on unseen data. Figure 2 shows the workflow of the research methodology.

Overview of research methodology.

Data sources

The dataset used in this study consists of data from three different cells. The dataset was provided by Dr. A. R. Kashif from the Electrical Engineering Department at the University of Engineering and Technology (UET) Lahore. The data was originally obtained by Areeb, a Ph.D. student under Dr. Kashif, who conducted extensive experiments on two different Li-ion cells, resulting in 22 variations in parameters. Additionally, data for one cell was sourced from Millat Factory, Pakistan.

Sample data and attribute details

The dataset includes various attributes essential for battery performance analysis. Table 2 there are a few sample data entries with detailed attribute descriptions.

The ‘Cell Type’ indicates the type of lithium-ion cell used in the experiment, ‘Parameter Variation’ is the specific experimental variations applied to the cell, ‘Voltage (V)’ shows the voltage reading of the cell, ‘Current (A)’ refers to the current reading during the experiment, ‘Temperature (\(^\circ\)C)’ shows the temperature at which the experiment was conducted, ‘Capacity (Ah)’ shows the capacity of the cell measured in ampere-hours, and ‘Cycle Life’ is the number of charge-discharge cycles the cell can undergo before its capacity degrades significantly.

This dataset enables a comprehensive analysis of the performance and longevity of lithium-ion cells under various conditions. The attribute details help in understanding the specific factors influencing battery performance.

Data preparation

First of all, there were some columns in the dataset that were not required or necessary for our scenario like vendor, counter, absolute time, and relative time min. That’s why these columns were dropped. Missing values in the dataset were handled using a mean imputation strategy. Then Sample 1 was reserved for the test dataset and while other 2 samples were used for the training dataset. After that normalization was done using MinMaxScaler. This class is used to scale data to a range between 0 and 1. This calculated the minimum and maximum values for each feature in the training data. Once the scalar has been fitted, it is used to transform the training and testing data. This scaled the data to a range between 0 and 1.

Feature extraction

Feature extraction is conducted using an auto-encoder neural network, specifically designed for the dimensionality reduction of the input dataset. The encoding layers, employing ReLU activation functions, effectively reduce the feature space dimensionality while retaining crucial information. This compressed representation, generated by the encoder, serves as our feature extraction mechanism. The decoding layers, although present, are primarily tasked with reconstructing the original feature space and are not the focal point of our feature extraction efforts. To optimize the training process, we implement a learning rate schedule and employ the Adam optimizer with a predefined learning rate. This approach streamlines the extraction of relevant features from the input data, bolstering performance in subsequent predictive tasks. Additionally, we ensure training stability by incorporating learning rate scheduling, with training encompassing a set number of epochs and a batch size of 16 for concurrent processing of multiple data samples. Throughout the training, we monitor progress via recorded training history, including performance metrics and loss values.

The input features as detailed in Table 2, include a comprehensive set of battery parameters such as current, voltage, energy, temperature, and internal resistance, which are critical for predicting the RUL of Li-ion batteries. The output is a streamlined 6-dimensional representation capturing the most relevant aspects of the data while filtering out noise. This reduced representation not only improves computational efficiency but also enhances prediction accuracy by preserving essential degradation patterns, leading to more robust RUL estimates and contributing to better battery management systems.

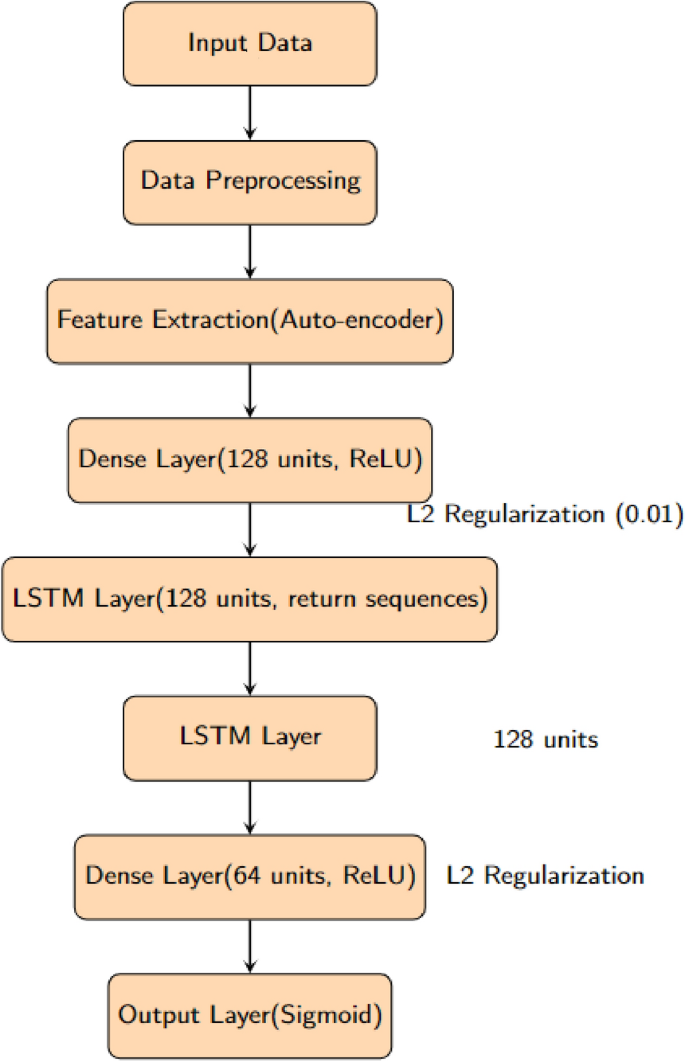

Model architecture

The architecture of the deep learning model is constructed using the Sequential model framework for predicting the RUL of Li-ion batteries. This architecture comprises several key layers, each serving a distinct purpose. Firstly, a densely connected layer with 128 units and ReLU activation is employed. To promote generalization and mitigate over-fitting, L2 regularization with a coefficient of 0.01 is applied. This layer is configured to accept input data with a shape that aligns with the reshaped training data. Subsequently, two LSTM layers, each consisting of 128 units, are incorporated into the model. The first LSTM layer is configured with the return-sequences parameter set to True, facilitating the return of sequences for subsequent layers. Following this, a densely connected layer with 64 units and rectified linear unit (ReLU) activation, along with L2 regularization, is introduced. Lastly, a dense output layer employing a sigmoid activation function and L2 regularization is included to generate predictions.

Model compilation

For model optimization, we employ the Adam optimizer and utilize the mean squared error as the chosen loss function. The model’s performance is evaluated based on the MAE since it is well-suited for regression tasks and provides a straightforward measure of prediction error.

Prevent overfitting

To prevent over-fitting and improve training efficiency, we implemented early stopping as a callback mechanism. This callback monitors the validation loss and halts training if there is no improvement over a predefined number of epochs (patience). The training process itself comprises 15 epochs, and we utilize a batch size of 32. It’s important to note that these hyper-parameters can be adjusted as needed for specific scenarios. Additionally, we retained the model weights that achieved the best validation performance. Throughout the training, a comprehensive record of the training history is maintained. This record encompasses performance metrics and loss values, which are instrumental in evaluating and interpreting the model’s efficacy. The architectural framework of the model is elegantly elucidated in Fig. 3.

Proposed model architecture for predicting remaining useful life Li-ion batteries.

After feeding the validation dataset into the model, we record the MAE values as an indication of predictive accuracy.

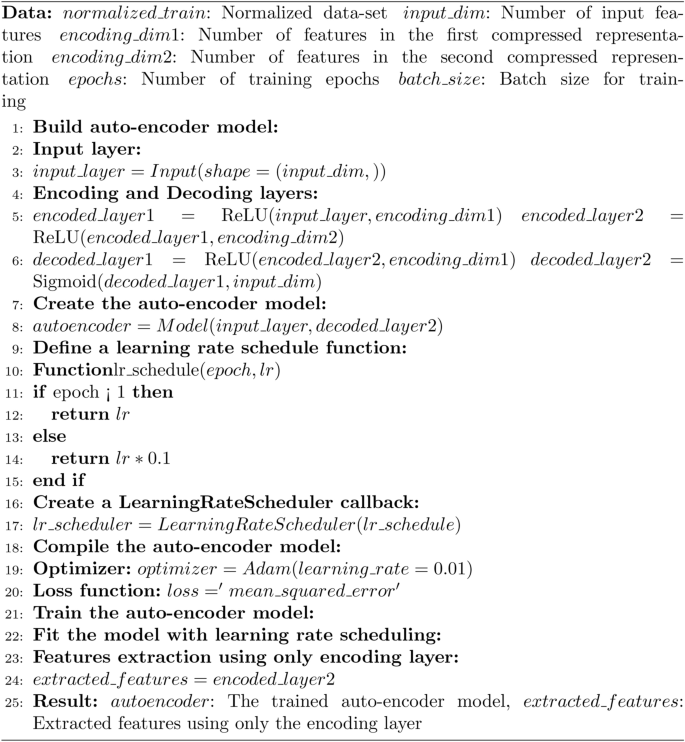

Algorithm

In Algorithm 1, the steps for feature extraction using an auto-encoder are outlined. Basically in this algorithm, an auto-encoder is employed to extract essential features from a normalized dataset. It utilizes two encoding layers with ReLU activation functions to compress the input features into more informative representations. A dynamic learning rate scheduling mechanism is introduced, gradually reducing the learning rate after the first training epoch. The resulting auto-encoder model is then compiled using the Adam optimizer with a specified learning rate and the mean squared error as the loss function.

Feature extraction using auto-encoder with learning rate scheduling.

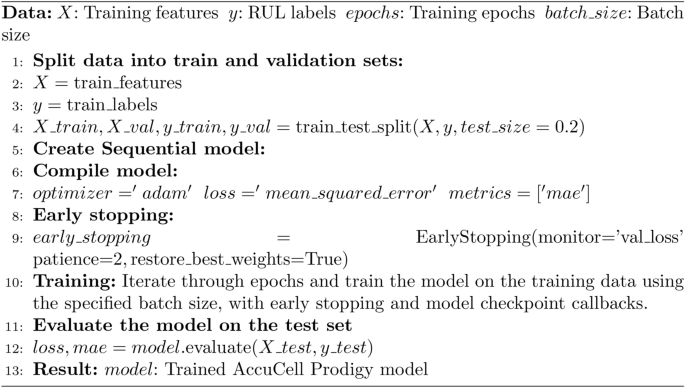

Using the Algorithm 1 features are extracted using only the encoding layer of the trained auto-encoder model. In Algorithm 2, we outline the steps for a deep learning-based improved approach for battery RUL prediction.

This algorithm outlines the process of training a deep learning model for battery RUL prediction. It commences by splitting the dataset into training and validation sets, allocating 80% for training and 20% for validation. The sequential model is then constructed, featuring a sequence of layers, including dense and LSTM layers, to capture temporal patterns. The model is compiled using the Adam optimizer, with MSE as the loss function and MAE for evaluation. Early stopping and model checkpoint callbacks are established to monitor validation loss and save the best model. The model undergoes training over multiple epochs using the specified batch size, ensuring optimal performance in RUL prediction.

This study introduces a comprehensive approach for Li-ion battery RUL prediction using deep learning with auto-encoder-based feature extraction. Beginning with meticulous data preparation, irrelevant columns are eliminated, and missing data is imputed. The dataset is divided for training and testing, followed by MinMaxScaler normalization. Feature extraction employs an auto-encoder with ReLU activation, optimizing dimensionality reduction. The model’s architecture incorporates key layers for precise RUL predictions, with optimization through the Adam optimizer, MSE loss, and MAE evaluation. Early stopping prevents over-fitting, and training history is monitored. This holistic approach advances battery technology and predictive maintenance, ensuring battery reliability across applications.

Model evaluation

We assess the performance of the proposed deep learning model for lithium-ion battery RUL prediction. The model was trained and fine-tuned based on the methods outlined in the previous section. Evaluation metrics and techniques are employed to gauge its effectiveness in predicting RUL accurately.

Evaluation metrics

To measure the model’s performance, we utilize the MAE, a common regression metric. MAE calculates the average absolute difference between the predicted RUL values and the true RUL values. This metric provides a straightforward interpretation of prediction accuracy.

Validation dataset

To validate the model’s generalization ability, we employ a separate validation dataset. This dataset was not used during the model training phase, ensuring unbiased evaluation. The validation dataset consists of a diverse range of lithium-ion batteries with distinct characteristics and operational conditions, making it representative of real-world scenarios.

link